The summer is a great opportunity to review event materials and see how things are going as part of the longer tail of your amplified event. I have just started a summer project examining the return on investment for event amplification as a support service for event organisers, and decided to start with the low-hanging fruit: event video footage.

Most of the event amplification plans that I put into action involve video in some way, usually in the form of recording presentations or conducting video interviews. I tend to use Vimeo or YouTube to host these videos, which means that detailed viewing statistics are publicly available. However, upon reviewing many of these videos, I am seeing a worrying trend towards low viewer numbers over time for many of these resources.

Vimeo statistics dashboard for a reasonably healthy video. Few look like this!

There might be many reasons for this: the videos may be very long and therefore represent too great a time investment for the target audience to watch, the videos may be too specialist in nature, they may have been intended for a very small audience in the first place, or they may not have been used or promoted by the client after delivery.

The latter leads me to worry that organisers are often not thinking longer term about their event materials and how they can be amplified over time. Once I have amplified the live event and provided all of the post-event materials, my invoice is paid and technically I have no influence over how the materials are used or exploited after this point. It is then down to the event organiser to make the most of those materials, which may not be practical for many reasons. I may need to change my business model to combat this, perhaps offering longer term amplification after an event, or more consultancy for clients to help them to make the most out of their event materials.

How should we measure video ROI?

I referred to video content as low-hanging fruit in terms of return on investment. This is because, of all my amplification activities, it is the easiest to relate directly to cost. I know how much it cost the client for me to produce the videos, so I can divide this by the number of views to calculate the cost per view for each video. Simple. As the number of views increases over time, the cost per view decreases and the videos therefore become better value for the client.
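As a minimal sketch of the cost-per-view arithmetic, the Python below divides a production cost by the view count at several points after the event. The £600 cost and the monthly view counts are invented for illustration; they are not figures from any real client.

```python
def cost_per_view(production_cost: float, views: int) -> float:
    """Return the production cost divided by the number of views."""
    if views <= 0:
        # Cost per view is undefined until the video has been watched at least once
        raise ValueError("views must be a positive number")
    return production_cost / views


# Hypothetical figures: one £600 video, checked at three points after the event
production_cost = 600.0
for month, views in [(1, 40), (6, 150), (12, 300)]:
    unit_cost = cost_per_view(production_cost, views)
    print(f"Month {month:>2}: {views:>3} views, £{unit_cost:.2f} per view")
```

As the view count grows, the unit cost falls (from £15.00 to £2.00 in this made-up example), which is the effect described above.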

However, as with a live video stream, the organiser needs to clearly define what success looks like for each individual video they request in order to provide a benchmark against which we can measure the success of that video. Success for an hour-long lecture in an extremely niche subject area may be only 5 or 6 views, provided those viewers are the right people. A 40-minute talk by a high profile keynote might be expected to attract much higher viewer numbers across a wider range of disciplines. One video may be produced purely to satisfy the ego of a difficult speaker, so the number of viewers it attracts may not be important, whilst another video may be intended to be used intensively a year down the line when the organiser is promoting the next event, so the viewer numbers before that point would be less significant.

It may be safe to assume that in most cases the higher the number of views and therefore the lower the unit cost per view, the better. However, unless the organiser has clearly defined what they intend to use the video for and how they hope it will be used over time, it is difficult to map viewer statistics against the organiser’s aims to ensure that the resource is actually delivering the type of return required.
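One way to keep the per-video aims in view is to record a target alongside each video and compare the view count against it. The sketch below assumes a hypothetical `Video` record with a `target_views` benchmark agreed with the organiser; the titles and numbers echo the niche lecture and keynote examples above but are otherwise made up.

```python
from dataclasses import dataclass


@dataclass
class Video:
    title: str
    views: int
    target_views: int  # hypothetical success benchmark agreed with the organiser


def meets_target(video: Video) -> bool:
    """A video succeeds on its own terms if it reaches its agreed benchmark."""
    return video.views >= video.target_views


videos = [
    Video("Niche one-hour lecture", views=6, target_views=5),
    Video("High-profile keynote", views=120, target_views=500),
]

for video in videos:
    status = "met" if meets_target(video) else "not yet met"
    print(f"{video.title}: {video.views} of {video.target_views} target views ({status})")
```

Here the lecture with six views has met its target while the keynote with far more views has not: raw view counts only become meaningful when set against the aims defined for each video.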

Increasing video ROI over time

Here are a few strategies I have been using, or intend to make greater use of in the future, as a result of my research in this area:

1. Have a plan

All videos are not created equal. When you plan which sessions of your event to record, or who to interview, consider who you expect to watch those videos and how you intend to use them. Each planned video should be considered separately, as they may all have very different aims. As an event amplifier, this will help me to plan how to use the footage, and how to measure the success of the amplification of that footage.

2. Embed in multiple places

I have recently taken to embedding event materials on Lanyrd as well as on official event websites to help increase exposure. However, there may be other forums where specific videos may be useful to both the event community and any overlapping communities. Work with your event amplifier to identify a list of appropriate places for each individual video. As a professional event amplifier working across a number of different overlapping disciplines, I am in the fairly unique position of being able to offer additional value to event organisers by effectively cross-pollinating between communities. You should also encourage your speakers to share video footage of their own sessions on their own websites or forums. Don’t just embed it on your event website and hope for the best!

3. Know what you’ve got

If you have a list of materials that is easily accessible, there is more chance that you will be able to share a relevant video should it become topical again at any point in the future. There are automated ways to measure the buzz around particular keywords or phrases and receive an alert, which could help trigger you to share a particular video. More on this in a later post.
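As a rough illustration of the idea only: given a batch of recent posts collected by whatever monitoring service you use, count how many mention each keyword in your catalogue of videos and flag the matching videos once a threshold is crossed. The catalogue, the threshold of three and the sample posts are all hypothetical, and no particular alerting service or API is assumed.

```python
import re
from collections import Counter

# Hypothetical catalogue mapping topical keywords to videos in the event archive
CATALOGUE = {
    "open data": "Panel: opening up research data",
    "accessibility": "Keynote: designing for everyone",
}


def keyword_counts(posts, keywords):
    """Count how many posts mention each keyword (case-insensitive, whole words)."""
    counts = Counter()
    for post in posts:
        text = post.lower()
        for keyword in keywords:
            if re.search(r"\b" + re.escape(keyword.lower()) + r"\b", text):
                counts[keyword] += 1
    return counts


def videos_to_reshare(posts, catalogue, threshold=3):
    """Return the videos whose keyword appears in at least `threshold` posts."""
    counts = keyword_counts(posts, catalogue)
    return [catalogue[kw] for kw in catalogue if counts[kw] >= threshold]


recent_posts = [
    "New open data policy announced today",
    "Thoughts on the open data announcement?",
    "Open Data is trending again",
    "Accessibility audit results are out",
]
print(videos_to_reshare(recent_posts, CATALOGUE))
```

A list that returns a video is the cue to schedule a social media update featuring it; the threshold is a judgement call and would need tuning to the volume of chatter in your sector.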

4. Plan to reuse

Identify periods where news stories may be scarce, such as the summer period, and schedule social media updates featuring videos that may have otherwise dropped off the radar. This works particularly well for shorter videos, such as video interviews or lightning talks, which you can promote for people to watch as a kind of “lunchtime snack”. Remember to explain what the video is and why it is useful to help sell it to your followers. If you want to promote longer videos, pull out the key highlights to help potential viewers decide if it really is worth the hour of their valuable time required to watch in full.

What have you tried?

I would be very interested to hear about any other strategies people have used to maintain interest in videos from events over time. Please leave a comment if you have tried anything out – successfully or otherwise!

Back in those early, halcyon days when a live video stream from an event in your sector was a rare and precious thing, one could build and they would come.


Now, as more people cotton on to the benefits of live streaming, there is a plethora of streams available from around the world, together with hours of useful content in the form of recordings.

Whilst this increased awareness of events online is great for me as an event amplifier, it is creating a competitive environment where it is very difficult to convince people to commit the time to watch a particular live video stream for any length of time.

We are fast approaching the point where organisers who want to invest in providing a live video stream from their event need to work as hard to attract an audience to that stream as they do to attract delegates to attend their event in person. I have been receiving more and more requests for live video streaming at events, which is great, but I have reached the conclusion that I need to be working more closely with event organisers from an earlier stage to understand what they want to get out of the live stream, and to provide more strategic advice about the ways they can use a live video stream to meet their aims.

This might include…

1. Advertise the stream early

People still value face-to-face meetings, so if they CAN come to your event in person, they will. Knowing there is a live video stream available will not sway the decision for most people.

However, most event organisers spend so much time panicking about “bums on seats” that they fail to promote their live video stream until very close to the event – or worse, leave it until the day of the event to announce that a live stream will be available! By that stage, everyone who might have watched remotely has a full schedule for the day and can’t take time out, or doesn’t get the message about it until too late. As a result, the stream gets low viewer numbers. The organiser is disheartened and feels that the stream has not delivered value for money.

I always advocate advertising the live video stream early, and promoting the link to people who have signed up to attend in person. Many organisations will not foot the bill for multiple team members to attend an event, either for operational reasons or due to the cost involved. The one or two team members who are permitted to attend your event are the perfect people to promote your live video stream to the other members of their organisations. The same thing works with speakers – if speakers know early enough that they will be streamed, they can promote this to their colleagues and associates to help raise their own profile.

It is essential to have a link to promote from an early stage, even if this is just a simple holding page. Circulating a link for the stream will increase the chances that someone who hears about the stream will be able to find it easily or bookmark it for reference.

2. Advertise an online programme

It is important to help your remote audience to choose which sessions they particularly want to watch so they can clear space in their schedule to watch. Few people will be able to watch the whole event from beginning to end, so providing a clear programme of what will be streamed when will help the remote audience to dip in and out to follow the sessions of most value to them.

It is also useful to provide some guidance about how to participate in an online event, including practical advice about following live, such as blocking the time out in their diary, telling colleagues that they will be busy, or finding an alternative space to watch via a laptop or tablet device where they won’t be disturbed.

3. Think about a broader audience

For your physical event, you may have an audience profile that is fairly specific, or at least contains people with a lot of overlapping interests. After all, they have to be interested in being together and hearing the same kind of material for the whole event. Your remote audience can be much, much broader. A member of your remote audience may only be interested in one particular session. They would never travel to your event for that one talk, and you probably never thought to market the event to them as a result.

I believe this is one of the key opportunities missed by most event organisers when they live stream their event. They assume that the remote audience will be very similar to their local audience in every way. However, if you think about each of your sessions in isolation, you might identify a broader audience who may wish to watch just that one talk. You can then reach out to those people through different channels to promote the live stream of that one talk and almost make a mini event of it. The speaker may be able to help with this.

4. Think strategically

Finally, it is important to consider how you could use your live video stream to meet strategic goals. This might involve creating links between other events happening at the same time, perhaps by streaming a session to another workshop or conference, or by establishing “pods” where small groups of people come together to watch the stream and engage with the discussions.

You also need to be really clear about what you hope to achieve with a live video stream. What will success look like? How many viewers would you be happy with? Are there any sessions where you would hope for higher viewer numbers? Mapping out these aims for the live video stream will help to establish how much effort needs to go into promoting the stream, and what strategies you might use.

Conclusions

Live video streaming can be an extremely effective and cost efficient way of opening up an event to a much wider audience. However, as with most event amplification techniques, as we move from a period of experimentation into a period of common practice, event organisers need to be clearer about their aims and be prepared to work with their event amplifier more closely to help make the most out of such a service.

I would be very interested to hear if anyone has tried any alternative techniques to those described above to help generate an audience for a live video stream. Please leave a comment below to share your own experiences.

I have written previously about my discovery of Storify and the role I could see it playing in amplified events. Since then, Storify seems to have taken off at a rapid pace, with many event organisers using it in different ways to support their events.

With this in mind, I felt it was high time I revisited the tool and documented the various ways I have been using it to help amplify events.

1. Storify as an event summary tool

A number of event organisers use Storify as an event summary tool. Most seem to use it as a means of displaying selected tweets or entire Twitter discussions, and add very little in the way of contextualisation or alternative content. Whilst this is overwhelmingly the most popular use for Storify, it does seem to defeat the purpose of the tool as a means of “curating” social media content.

From my earliest dabbling with Storify, I have aimed to use it to tell a story, by adding illustrations from Flickr, useful resources such as videos or slides, contextualising headings and original commentary to help bring the materials together. Particularly since the arrival of embeddable tweets, one could argue that this type of summary could be recreated perfectly well on most blogging platforms, without the need for Storify at all, except that it provides an easy interface to search different streams of social media content, then drag and drop it into place. However, I have recently worked on a number of smaller workshops, where there is no appropriate blog or platform for such a post to appear, and creating one for the purpose is equally not appropriate. Having the summary hosted separately on Storify allows the organisers to engage with social media, without the need for any more permanent infrastructure to monitor and maintain.

A good example of this is my summary of the Intelligent Buildings and Smart Estates event, which was organised as part of the JISC Greening ICT programme. This brought together all of my coverage from the event, including photos, tweets (as @RobBristow) and video interviews with participants. Whilst it is a little on the long side for a Storify summary, it provides an overview of the event in one place.

As an external or consultant event amplifier, using Storify in this way also provides a number of logistical advantages:

- I don’t have to be established as a user on a project or organisation blog

- I don’t get held up by the security settings on someone else’s site or blog, which can often prevent me from embedding materials using iframes

- I can easily notify people I have quoted in my summary of the event, which makes the process more open and spreads the word about the summary much more effectively

- Organisers can send out one link to the Storify summary in their post-event communications, providing one central point for participants to recap or share with their colleagues

2. Storify as an online programme

At IWMW 12, I experimented with using Storify to create an evolving programme – that is, a page that starts as a programme and gradually becomes a summary as each session is completed. This was originally created for our own logistical benefit as event amplifiers: we needed a way of collecting the speakers’ slides together in a single place so that my colleague could show them as part of the live video stream.

Inspired by the JISC 2011 conference’s use of an online programme to help inform remote delegates about live streamed content, I decided to publish this and use it throughout the day as a tool for the remote audience. As each session finished, I added a one paragraph summary of the session and a selection of the most pertinent tweets, to help give anyone who missed the session a flavour of what was covered, and what the audience thought about it.

As a result, by the end of the day my programme had evolved into a complete summary of the proceedings. This meant I was promoting the same link throughout the day and afterwards, thus reducing the number of separate links being tweeted around to various resources, which can often be an overwhelming side-effect of amplification at the end of an event.

The programme/summary could also be included in summaries produced by other participants. Ann Priestley included it in her live blog (also created using Storify).

The two summaries can be viewed here:

Note: IWMW Day One featured mainly parallel sessions, which were not live streamed, and one plenary, which was publicised to the remote audience separately.

3. Backing Up Storify

Storify offers an export function, which allows you to publish the summary to your own blog, where it appears in HTML. I have created a separate Amplified Event Back Up Blog to collect back up copies of each of my Storify summaries, using this facility. In this way, I can protect my work and my clients from any loss of materials, should anything untoward happen with Storify. So far, the service has been very stable, but I have observed that several of my very early stories are no longer showing up on the site. Storify does not provide any details about how long it will keep users’ stories available, but it does have perhaps one of the nicest sets of terms and conditions of any online service I have come across. Many online services periodically clear out old content or old statistics, so it is always safer to have a backup that is under your own control.

Conclusions

Further changes in practice may evolve both as the tool develops and as we come up with more diverse ways of deploying it within the amplified event mix. For now, this is one tool that is growing in hype, but needs more widespread intelligent use to really shine as an event amplification tool.

Things have been a bit quiet around here recently. Here’s why…

Last week saw the launch of the JISC-funded Greening Events II Event Amplification Toolkit, as announced by Brian Kelly at the Institutional Web Management Workshop (IWMW) 2012. Brian explains why this was particularly apt in his blog post “Conferences don’t end at the end anymore”: What IWMW 2012 Still Offers.


I was honoured to have collaborated on this project with the Greening Events II team: Debra Hoim, Paul Shabajee and Heppie Curtis, and Brian Kelly from UKOLN, with support from the JISC Greening ICT programme manager, Rob Bristow.

The toolkit sets out to define amplified and hybrid events, to provide best practice for engaging with some of the most popular types of tool, and to help organisers rethink the type of event that would best suit their goals. It can be used as a whole as an introduction to amplified events or as a series of briefing papers, and contains a selection of case studies to illustrate how the principles described have been used in practice. Whilst the report acknowledges that amplifying an event may not actively reduce its environmental impact by reducing delegate travel, it shows how amplification can help to expand the audience of that event without a corresponding increase in carbon and other greenhouse gas emissions. The report also includes some pioneering work by Paul Shabajee exploring how the carbon costs for amplified events can be estimated.

You can download the report in full here.

My key aim in this report was to document key amplification concepts and best practice principles in a way that is accessible to event organisers who are new to these types of activity, and in a way that will not become outdated as the range of third party social media services evolves. To overcome this challenge, I offered a series of briefing documents that cover different types of amplification activity rather than specific tools, including:

- Live Video Streaming

- Live Discussion Tools

- Resource Sharing Tools

- Event Capture Tools

The report also highlights the different perspectives held by the various actors within an event (speakers, suppliers, local and remote audiences), and provides an amplified event planning template complete with guidance about how to assess the risks associated with amplification.

The premise of the report fits in with the wider “openness” agenda, as I discussed recently in a guest post for the UK Web Focus blog.

Whilst this project has been invaluable to me as a way of assessing how far we have come and consolidating this progress, it has also highlighted a number of lessons, which I hope to investigate further in subsequent blog posts. In particular, it has made me acutely aware that we need to move on from the experimental stages towards more evidence-based event amplification design. To do this, there needs to be a stronger emphasis on collecting better feedback from remote audiences and collecting adequate ongoing data to illustrate the use of amplified event materials over time.

So, lots to ponder!

I will be blogging about some of these lines of thought in more detail over the coming weeks. In the meantime, I hope you all enjoy the Greening Events II Event Amplification Toolkit and welcome any feedback.

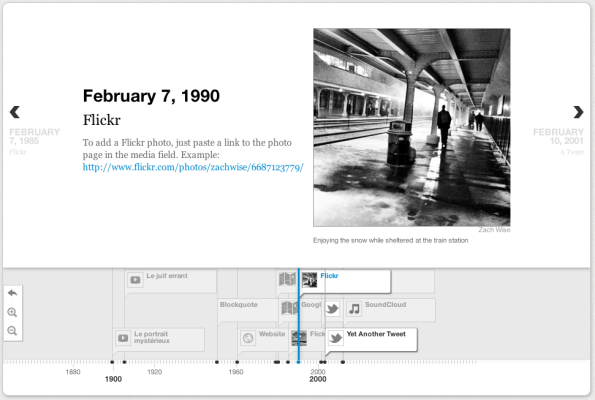

Today has been a day for playing with new tools. My favourite is Timeline, a curation tool that allows you to present a variety of different online media in an interactive timeline. It is very pretty, although it appears to involve a bit of fiddling with code to use – no “drag and drop” interface just yet.

I am at the early stages of playing with this and have yet to make use of it in an event context. However, I did want to reflect on some of the key reasons why this is ticking boxes for me so far…

1. You can self-host your timeline

You can download and self-host all the files you need, so you have complete control. One of the main risks of using third party tools for any aspect of event amplification is that they can change, break or even disappear entirely without any notice. I spend a lot of time devising back up strategies for such tools, and considering the longer term preservation of materials created/collected within them. For this reason, tools that allow export of materials in a reusable format and self-hosted solutions are always preferable, although as I have reflected before there is still the issue of who should be maintaining event materials in the longer term and for how long.

2. They are upfront about known issues

When you are amplifying an event you ideally want to pick tools that work for the broadest range of people so you don’t discriminate based on device or browser (unless they are running IE 6). Arguably, an event amplifier should test all of the tools they plan to use ahead of an event so they know where the support issues are likely to arise and where alternatives may be required for certain sections of the audience. However, this is not always practical for every event and every tool. A clear message about known issues – in this case, a problem with iPhone compatibility and an IE 8 bug – can help enormously.

3. It pulls together multiple media types

Pulling together materials from different sources has been one of the most powerful uses of Storify, and Timeline offers a similar level of flexibility, with sources including Twitter, YouTube, Flickr, Vimeo and Google Maps. Whilst event amplification and transmedia approaches favour materials being spread out over different platforms and in different formats to encourage engagement in different contexts, there is still a need to make sense of these resources as part of an overarching narrative and to provide some kind of guided route through the materials to aid discovery. There will be times when a Storify commentary will suit the requirements of an event, and there will be times when a timeline will be the better way to contextualise the materials – particularly when an event is part of an ongoing series. The event that immediately springs to mind is IWMW, which has a history of over 15 years and a plethora of digital materials which could be woven around key external events to show how the event has evolved and responded to the needs of the target audience.

4. It can be used collaboratively

One of my slight gripes with Storify is that it is designed for a single user/curator, rather than offering any group authoring options. Whilst a Timeline can be created by one user editing the JSON code, there is also an option to build a Timeline using a Google Docs spreadsheet. This means that one could choose to create a Timeline collaboratively by sharing the spreadsheet, which could prove very useful indeed – particularly at large-scale events where there are multiple event amplifiers/journalists covering the proceedings.
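To illustrate why a shared spreadsheet suits collaborative curation, the sketch below reads rows from a CSV export of a shared sheet and turns them into a chronologically sorted JSON structure. The column names and output keys (`date`, `headline`, `text`, `media`), the sample rows and the example.org URLs are placeholders chosen for this example, not Timeline's documented schema, so check the Timeline documentation for the exact format it expects.

```python
import csv
import io
import json

# Hypothetical CSV export of a shared spreadsheet: one row per timeline entry.
# Column names are placeholders, not Timeline's documented schema.
SHEET = """date,headline,text,media
2012-06-18,Example event 2012,Keynote video,https://example.org/video-2012
2010-07-12,Example event 2010,Photo set from day two,https://example.org/photos-2010
2011-07-26,Example event 2011,Storify summary of day one,https://example.org/story-2011
"""


def rows_to_timeline(csv_text, title):
    """Convert spreadsheet rows into a chronologically ordered timeline structure."""
    reader = csv.DictReader(io.StringIO(csv_text))
    entries = [
        {key: row[key] for key in ("date", "headline", "text", "media")}
        for row in reader
    ]
    # ISO 8601 dates (YYYY-MM-DD) sort correctly as plain strings
    entries.sort(key=lambda entry: entry["date"])
    return {"title": title, "entries": entries}


timeline = rows_to_timeline(SHEET, "An event series over time")
print(json.dumps(timeline, indent=2))
```

Because collaborators only ever add rows to the spreadsheet, the JSON can be regenerated whenever someone contributes an entry, rather than one person hand-editing the file.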

Conclusions

I’m quite excited to try out Timeline, despite the slightly daunting coding element. I have been increasingly finding that event organisers are finding value not just in distributing materials to amplify messages to different groups and networks, but also pulling them together into a coherent summary. Another tool in the box to facilitate this has to be worth investigating further. 🙂

A new amplification tool was released onto the unsuspecting iTunes App Store this week: Tweet Buzzword Bingo.


Tweet Buzzword Bingo is an iPad app that allows you to play Buzzword Bingo using a Twitter hash tag. The organiser sets up the game with up to 16 buzzwords and specifies the hash tag. As the audience tweets about the event – be it a conference, workshop, seminar or informal meeting – the game searches the hash tag for the buzzwords and reveals a square each time a buzzword is tweeted. The winner is the person who tweets the most buzzwords.
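The game logic described above can be sketched in a few lines. This is not the app's code: it assumes tweets arrive as `(user, text)` pairs, that a buzzword counts once per tweet in which it appears, and that only tweets carrying the event hash tag score. The `play` function, the sample tweets and the `#eventtag` hash tag are hypothetical.

```python
import re
from collections import Counter

MAX_SQUARES = 16  # the post describes boards of up to 16 buzzwords


def play(tweets, buzzwords, hashtag):
    """Return (revealed buzzwords, per-user scores) for a list of (user, text) tweets."""
    if len(buzzwords) > MAX_SQUARES:
        raise ValueError(f"a board holds at most {MAX_SQUARES} buzzwords")
    tag = hashtag.lower().lstrip("#")
    patterns = {
        word: re.compile(r"\b" + re.escape(word.lower()) + r"\b") for word in buzzwords
    }
    revealed = set()
    scores = Counter()
    for user, text in tweets:
        lowered = text.lower()
        # Only tweets carrying the event hash tag take part in the game
        if tag not in re.findall(r"#(\w+)", lowered):
            continue
        for word, pattern in patterns.items():
            if pattern.search(lowered):
                revealed.add(word)  # reveal that buzzword's square
                scores[user] += 1   # one point per buzzword per tweet


    return revealed, scores


tweets = [
    ("@alice", "Great point on open access #eventtag"),
    ("@bob", "Open access and linked data on one slide! #eventtag"),
    ("@carol", "Linked data again, but I forgot the hash tag"),
]
revealed, scores = play(tweets, ["open access", "linked data", "mobile"], "#eventtag")
winner, points = scores.most_common(1)[0]
print(sorted(revealed), winner, points)
```

Scoring one point per buzzword per tweet is a design choice for this sketch; counting only each player's distinct buzzwords would reward breadth over repetition.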

I have been working with the developer over the last few months to help inform the design and to test the app prior to release. Some time ago, I wrote a blog post about an early prototype version of the game, which was created for the Institutional Web Management Workshop (IWMW) in 2010. I reflected that the game was:

“…designed to encourage the audience to think about and tweet about the main themes of the presentation, thereby actively engaging them not only in the online dimension to the event, but also in the presentation itself in a more practical manner.”

This became the guiding principle behind the iPad version of the game. By encouraging people to tweet more, you are effectively encouraging them to amplify the event to their professional and social networks, thus expanding the reach of the event. Depending on how the game is framed when introduced to the players, it could help to stimulate less active Twitterers to tweet a bit more and perhaps open their eyes to the value of Twitter at events.

Active game of Tweet Buzzword Bingo

I demonstrated a development version of the app during my presentation at Online Information 2011 last December. Whilst there were still a few bugs at that stage, the concept worked well. During the previous talk only two people out of the 30 or so in the room had tweeted, one of those being the official Twitter moderator for the session, who was commentating. After challenging the audience to a game of Tweet Buzzword Bingo, this increased to six, thus expanding the reach of my talk.

There has been lots of interest in how the game might be used in a teaching context, rather than just an events context, so I am curious to see how it is applied outside our original design scenario. The process of listening to a lecture and forming a 140 character tweet to summarise a key point is one which demonstrates comprehension as well as increasing engagement, so the game could potentially have a role as an educational tool as well as an event amplification application.

I’m looking forward to trying out the final release version of the app at a suitable event in the near future. However, if anyone has a go with it in the meantime, I’d love to hear how you get on!

________________________

Tweet Buzzword Bingo is available for iPad via the iTunes App Store.

Further information about the app can be found on the Tweet Buzzword Bingo homepage.

You can also find Tweet Buzzword Bingo on Twitter @tweetbuzzbingo.

Over the last few months I have been working with UKOLN to produce an amplified events toolkit as part of the JISC Greening Events II project. This has involved writing up a number of case studies from recent amplified and hybrid events, and creating short briefing papers about some of the common tools and services used in amplifying an event. I was keen to provide a framework for assessing the risks surrounding the use of each tool or group of tools as part of this work, so event organisers can review those risks and take steps to mitigate them.

The single most embedded tool used to amplify events at the moment is, of course, Twitter. It is also one of the tools that the event organiser has least control over in terms of its use, so it carries with it a whole host of risks that can daunt more traditional event organisers. It therefore seemed like a good tool to start with.

My draft risk assessment for the use of Twitter at events includes the following points:

Failure to engage: This can result in audience dissatisfaction going unnoticed, missed opportunities to improve the delegate experience and spread event messages further.

Poor wifi or mobile connectivity: If you know your audience is likely to tweet, check that the venue has sufficient wifi or mobile connectivity to support online activities. Many commercial venues charge high fees for individual access to wifi, so it may be necessary to negotiate conference rates well in advance.

Inappropriate hash tag choice: A clash between hash tags or an unfortunate choice of hash tag can cause embarrassment for the organiser and confusion amongst the audience, who will often suggest competing alternatives. You can mitigate against this risk by checking your choice of hash tag thoroughly using a Twitter search and by checking any acronyms in a search engine. Start using your hash tag well in advance of the event to avoid any last minute clashes with other events.

Spam: Popular hash tags can attract spamming activity, which may be inappropriate. However, most mature Twitter users can spot spam content and filter it out. If you have someone monitoring the hash tag they will be able to report any spammers to Twitter for them to take appropriate action (usually blocking the account).

Mob mentality: On rare occasions the audience may engage in a negative critique of the speaker whilst a presentation is ongoing. Do not show tweets on a screen behind the speaker whilst they are talking, unless integral to the presentation, and identify any controversial presentations so you can plan how to respond to any difficult situations that may arise.

Several incidents have brought some of these risks home to me recently and have thus informed the guidance I have included in my risk assessment. I want to briefly touch on two of these issues to reflect how my experiences have influenced the thinking behind this draft guidance.

Mob Mentality

An audience bonding together on Twitter to gang up on a speaker is, in my experience, a very rare occurrence, but one which can cause acute embarrassment and can potentially be quite nasty. This type of behaviour can catch you off guard and can be difficult to manage. Do you nip it in the bud with a light-hearted put-down? Do you let it run its course and leave the audience to self-regulate? Do you scold the audience and tell them to grow up? Is it even possible to strictly moderate what is supposed to be an open backchannel for discussion? Organisers may want to protect their speakers from offence and maintain a positive, professional sense of decorum around their events, but there is always a risk that the audience may not conform.

At times, a negative backlash from the audience via the backchannel could have been anticipated by the organisers. Sometimes simply seeing the presenter’s slides in advance and talking to them about what they intend to say will help to identify areas where the audience may take issue, or where particular images or presentation styles may be inappropriate. Organisers don’t need to restrict their speakers from saying contentious things, but they do need to make the speaker aware of the questions/objections that could be raised during the Q&A, and alert their event amplifier so they can plan how best to handle the situation sensitively.

I don’t feel it is appropriate for me to detail the events and circumstances where I have encountered this behaviour so soon after those events. However, I do have archives of the event hash tags concerned to study and learn from, so I may return to this issue at a later stage.

Twitter Spam

I encountered this problem at the recent Leeds Trinity Journalism Week, when I was overseeing the launch of their event amplification. The opening keynote speaker was Jon Snow from Channel 4 News, which sparked a lot of interest, and the event hash tag quickly rose into the UK trending topics on Twitter. This was great for the event organisers, but quickly led to an unfortunate side effect: two new mobile phone handsets were launched on the same day, and spammers were jumping onto trending topic hash tags to push their own marketing messages. This was an aggressive strategy, making use of Twitter accounts specially established for the purpose with profile pictures featuring scantily clad women.

Part of my role was to monitor the event hash tag and respond to any problems raised by the remote audience watching the event via the live video stream. I ended up spending the morning blocking spammers from every Twitter account I had access to. Unfortunately, this is not an instant fix, and there were times I had to watch a screen full of spam tweets go by before the offending account was blocked by Twitter. The spammer then moved on to another account, and the battle started again.

There is not much the event organiser can do to avoid this type of situation. They just need to be aware that whilst trending is good, it comes with this unfortunate side effect. In this instance, having a dedicated person monitoring the stream meant that we could take action straight away, rather than relying on the audience getting annoyed enough to block the accounts themselves.

Conclusions

Carrying out a risk assessment on something as embedded as Twitter has really highlighted for me some of the pitfalls that can occur and how many things I am juggling in my head when I am supporting the use of Twitter at an event. The risks I have highlighted are really the practical, organisational issues an event organiser would need to plan for, but there are also subtle risks associated with the actual practical use of Twitter to provide a live commentary or to deliver information. I have not touched upon these here, mainly because they overlap with traditional customer service and reporting principles.

This work is still ongoing, so I would be interested to hear if there are any further risks anyone can suggest should be included. Please leave a comment below or tweet me @eventamplifier with any suggestions.

Last Monday I covered my first event since the demise of Twapperkeeper: the LIS DREaM 3 workshop at the British Library. This gave me an opportunity to test Martin Hawksey’s TAGS solution, which makes use of Google Spreadsheets to collect and visualise Twitter hash tags.

Martin’s instructions and video demonstration made it very easy to set up the archive, and I checked in on it several times before the event to make sure everything was working smoothly. I had the added “belt-and-braces” assurance that we were using CoverItLive to display tweets and provide an alternative discussion space during the event, so there was a separate archive of the event in case I made any mistakes during the TAGS set up. User error was the main risk I identified when assessing the TAGS tool, which is based on the established Google Docs platform and benefits from a growing user base and hands on support from the developer.

As it turned out, everything went very smoothly and it really was quite simple to use.

During the event, the TAGS spreadsheet enabled me to report that we had reached an average of a tweet a minute throughout the event. It also showed how many re-tweets we had achieved (based on the occurrence of the term “RT”) and how many links were shared on the event hash tag #lis_dream3. In addition to this, Martin has created a dashboard which shows some of the basic visualisations we have come to appreciate from Summarizr, which used the Twapperkeeper API. This includes graphs of activity levels on a hash tag and a pie chart showing the proportion of tweets by major players.
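The statistics above are simple enough to reproduce from any exported archive. What follows is a minimal sketch, not how TAGS itself calculates them, assuming each tweet is a record with a `created_at` timestamp and a `text` field; the function name `summarise` and the sample records are hypothetical. Like the TAGS figure, it counts re-tweets crudely by the occurrence of the term “RT”.

```python
import re
from datetime import datetime

RT_PATTERN = re.compile(r"\bRT\b")        # the term "RT" as a whole word
LINK_PATTERN = re.compile(r"https?://")   # any http or https link

def summarise(tweets):
    """Basic hash tag statistics: volume, average rate, re-tweets and links."""
    if not tweets:
        return {"tweets": 0, "per_minute": 0.0, "retweets": 0, "with_links": 0}
    times = sorted(t["created_at"] for t in tweets)
    span_minutes = (times[-1] - times[0]).total_seconds() / 60
    # Treat a zero-length span (e.g. a single tweet) as one minute.
    per_minute = len(tweets) / span_minutes if span_minutes > 0 else float(len(tweets))
    retweets = sum(1 for t in tweets if RT_PATTERN.search(t["text"]))
    with_links = sum(1 for t in tweets if LINK_PATTERN.search(t["text"]))
    return {"tweets": len(tweets), "per_minute": per_minute,
            "retweets": retweets, "with_links": with_links}

# Illustrative sample records (hypothetical, not real event data).
sample_tweets = [
    {"created_at": datetime(2012, 1, 1, 10, 0), "text": "Opening talk starting now #lis_dream3"},
    {"created_at": datetime(2012, 1, 1, 10, 1), "text": "RT @someone: great point #lis_dream3"},
    {"created_at": datetime(2012, 1, 1, 10, 2), "text": "Slides at http://example.org #lis_dream3"},
]
print(summarise(sample_tweets))
```

Averaging over the span from first to last tweet is a simplification: quiet periods such as lunch breaks pull the rate down, so the figure is best read alongside the activity graph.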

I notice that Martin has some advanced features that make use of a Twitter developer key, including the facility to extract links shared in tagged tweets. I didn’t feel quite brave enough to attempt using these features this time around.

However, I did try out Martin’s TAGSExplorer Beta, which creates an interactive visualisation of Twitter conversations from the public link to the TAGS archive. You can use this to replay conversations surrounding particular people. From this, I was able to separate out those who were using Twitter to hold conversations on the #lis_dream3 hash tag and those who did not use Twitter in this way, which in this case was about 50:50.
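For anyone wanting a rough version of that split from their own archive, one possible rule is sketched below. It is an assumption of mine rather than how TAGSExplorer works: an author counts as conversational if at least one of their non-re-tweet messages @mentions another account. The record fields `user` and `text`, the function name and the sample data are all hypothetical.

```python
import re

MENTION = re.compile(r"@(\w+)")  # an @username mention

def split_conversational(tweets):
    """Split authors into those who addressed other accounts and those who did not."""
    authors = {t["user"] for t in tweets}
    talkers = set()
    for t in tweets:
        if t["text"].startswith("RT "):
            continue  # a re-tweet passes content on rather than starting a conversation
        mentioned = {m.lower() for m in MENTION.findall(t["text"])}
        mentioned.discard(t["user"].lower())  # ignore self-mentions
        if mentioned:
            talkers.add(t["user"])
    return talkers, authors - talkers

# Illustrative sample records (hypothetical).
conversation_sample = [
    {"user": "alice", "text": "@bob do you agree with the speaker? #lis_dream3"},
    {"user": "bob", "text": "@alice yes, mostly #lis_dream3"},
    {"user": "carol", "text": "Key point: share the data #lis_dream3"},
    {"user": "dave", "text": "RT @alice: @bob do you agree with the speaker? #lis_dream3"},
    {"user": "erin", "text": "Coffee break, back at 11 #lis_dream3"},
]
talkers, broadcasters = split_conversational(conversation_sample)
print(sorted(talkers), sorted(broadcasters))
```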

You can view this in motion here

I’m really impressed with TAGS and the TAGSExplorer tools, and can definitely see a role for these in feeding back to event organisers about Twitter activity surrounding their events. However, the difficulty I face is how to interpret the information provided by such tools to provide event organisers with something meaningful to inform future decisions to amplify an event. This may mean carrying out more comparative work between events to show what is typical and where it can be argued that an event has made a bigger impact than other, similar events.

Ultimately, many event organisers want to know how many people have been “watching” the event on Twitter. Whilst this may not be possible, being able to show the potential reach of the event based on the follower numbers of each person tweeting or re-tweeting a tagged tweet would be a really useful statistic to help address this request.
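As a sketch of how such a figure might be calculated, assuming follower counts for each account have already been collected into a dictionary (the `followers` mapping, the record fields and the sample numbers are hypothetical), potential reach is the sum of follower counts over the distinct accounts that tweeted or re-tweeted on the hash tag:

```python
def potential_reach(tweets, followers):
    """Sum follower counts over each distinct account that tweeted or re-tweeted.

    An upper bound: followers shared between accounts are counted more than
    once, and most followers will not see every tweet. Accounts missing from
    `followers` count as zero.
    """
    accounts = {t["user"] for t in tweets}
    return sum(followers.get(user, 0) for user in accounts)

# Illustrative sample data (hypothetical).
reach_tweets = [
    {"user": "alice", "text": "Great session #example"},
    {"user": "alice", "text": "Slides now online #example"},
    {"user": "bob", "text": "RT @alice: Great session #example"},
]
reach_followers = {"alice": 120, "bob": 300, "carol": 50}
print(potential_reach(reach_tweets, reach_followers))
```

Counting each account once, however many times it tweets, avoids inflating the figure for prolific tweeters, but the total should still be presented as potential rather than actual audience.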

I’m looking forward to playing with the TAGS tool further at future events and seeing what the talented Mr Hawksey comes up with next!

Over the last year I have seen an increasing number of requests for my amplification services at small workshop sessions, generally ranging in size from 20-40 participants. Many of the organisers of these workshops ask me to produce videos of each presentation in their programme, as part of the permanent record of the event. These are normally uploaded to video sharing sites such as Vimeo or YouTube.

Unlike larger conferences, where you often find professional AV assistants, high quality PA systems, proper staging, lighting etc., smaller workshops are often conducted in situations which make filming more challenging. Aside from the difficulties of positioning a camera in what may be a poorly lit room (for the purposes of filming) without obstructing the view of participants in the room, we often find it is particularly difficult to get good quality sound at such events.

Beautifully lit, HD quality video is obviously very nice, but when you are producing video of a presentation for the web, the sound is the most important aspect to get right. People will often play videos or live streams on their computer whilst working on other things, making the picture quality of the video secondary to the quality of the audio. This is an issue I have been struggling with for a while, along with my husband Rich, who often assists me at events and does much of the video post production for me. After spending hours trying to rescue some particularly poor audio from one such event, we decided that we needed some help and a strategic rethink of how we approach covering these events.

We enlisted the help of a friend, Gavin Tyte, a professional performer and former music technology teacher who does all of his own sound engineering and video production. This is the kind of thing Gavin normally gets up to:

Gavin was able to give us an audio surgery, in which we assessed our existing equipment, discussed the different scenarios we face at events when we are required to record audio, and established what we can do to improve both our raw recordings and the post production of our audio.

This was quite a wide-ranging session, so I want to focus on just one of the scenarios we discussed, and the changes in practice we hope to adopt.

One of our most pressing issues was how best to mic a speaker at a small workshop. We often find that when a local PA system exists (and is turned on!) the microphone is fixed to a desk or lectern. There is normally no way to take a direct feed from this, so we have to position our shotgun microphone next to a PA speaker close to the video camera. However, speakers rarely think to reposition the PA mic to an appropriate height before they start talking, and often wander away from it altogether. This is very easy to do in an informal setting, particularly if the room and audience are of a size where the speaker can be heard perfectly well with minimal projection.

The same thing happens when we put our shotgun mic near the front to pick up the speaker’s voice directly. The shotgun mic is directional, and ideally needs to be as close to the speaker as possible to pick up the best possible sound. If the speaker moves around, or chooses to stand in a position away from the mic for the majority of their talk, I have no opportunity to turn it or reposition it without obstructing the view of the audience.

Gavin advised us to forget about any local PA system completely and concentrate on collecting the sound directly, preferably using a good quality wireless lapel mic with the receiver plugged directly into the camera. A top of the range wireless lapel mic set can cost upwards of £500, but there will be cheaper solutions. He also suggested using the shotgun mic in addition to the wireless lapel mic, positioning this close to the speaker and attaching it to an iPod or similar device. This will create a separate back up recording, which can be used to supplement the audio from the lapel mic in the event of any problems. This allows us to take full control of the quality of the audio we get, without relying on equipment in the room or speakers making correct use of such equipment.

Whilst this technically solves our problem, I believe there is a human issue to be addressed here too. I need to encourage organisers to brief their speakers more effectively, not just so that the remote audience can hear them on the recording (particularly in the event that we need to rely on the back up audio for any reason), but also to help improve the accessibility of the event as a whole. After all, anyone in the physical audience who may be hard of hearing will experience exactly the same problem as the remote audience if the speaker fails to use the PA equipment provided effectively.

We discussed lots of other topics in our audio surgery and got some really valuable advice from Gavin about working with professional sound engineers at conferences to get the best possible feed from a managed desk, and where replacing key pieces of kit with high quality equipment will really pay off. Whilst he was able to give us training to make better use of our software for post production, he was very firm: you can’t repair a bad recording. It is vital to take control and invest in the right equipment to get the very best original recording possible. This has to be our focus at smaller events over the next few months to improve the quality of what we produce for our clients.

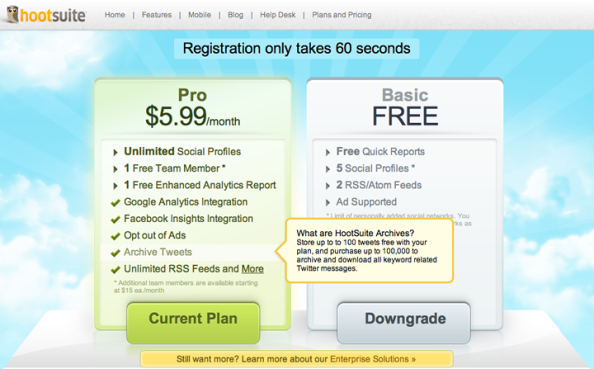

“Thanks for using Twapper Keeper – we look forward to seeing you at HootSuite.”

So begins the long goodbye to an incredibly useful service.

With just a few clicks, even the most casually interested observer could create a public archive of a Twitter hash tag. This was immensely useful to me as an event amplifier, as it not only allowed me to provide visualisations, using tools like Summarizr, but also provided a rich pot of data about Twitter interaction at amplified events.

Brian Kelly’s recent post, Responding to the Forthcoming Demise of Twapper Keeper, outlines the processes currently available for migrating Twapper Keeper archives and suggests some of the factors which may influence decisions by key stakeholders about which archives should be preserved. In this post I will summarise my own response to the announcement, which overlaps with much of Brian’s advice, and consider some of the implications of the withdrawal of Twapper Keeper from my perspective as an event amplifier.

“My” Archives

I feel a personal obligation to rescue the archives I have created myself, but also have a vested interest in the preservation of a number of archives created by others – particularly those which record discussions surrounding events where I have been involved. After all, those archives effectively represent a record of my work to date, as well as a valuable evidence base for future research into the use of Twitter at amplified events.

As a general rule, I do not create Twapper Keeper records for events myself, as I am outside of UK HE and was therefore only permitted to create a limited number of archives. In fact, I have only ever created one archive myself. Instead, I encourage clients to create an archive for their event, as they are (in the main) part of the UK HE establishment and are therefore not subject to the same restrictions. Unfortunately, this strategy means that I cannot necessarily rely on someone else backing up archives that interest me before Twapper Keeper disappears, so I have chosen to undertake this myself.

I have used Martin Hawksey’s Google Spreadsheet tool, described in his post: Free the tweets! Export TwapperKeeper Archives Using Google Spreadsheet. I will also take a series of screenshots from Summarizr about each archive to serve as a visual summary of the data contained within the archive, for reference purposes.

For the record, the archives I have created so far are:

| Hashtag | Event |
|---|---|
| #lis_dream1 | The LIS DREaM Project Launch Event, July 2011 |
| #idcc11 | The 7th International Digital Curation Conference, December 2011 |
| #idcc09 | The 5th International Digital Curation Conference, December 2009 |
| #iwmw11 | The Institutional Web Management Workshop 2011 |
| #iwmw10 | The Institutional Web Management Workshop 2010 |
| #devxs | DevXS: Student Developer Hackathon, November 2011 |
| #dev8d | Dev8D: The annual DevCSI Developer Happiness Days |
| #a11yhack | DevCSI Accessibility Hack Event, June 2011 |
| #jisc11 | The JISC Conference 2011 |
| #jiscrim | JISC Research Information Management Final Project Event, September 2011 |
| #jiscres10 | JISC Future of Research Conference, October 2010 |
| #jiscres11 | JISC Research Integrity Conference, September 2011 |
| #ukolneim | UKOLN Social Media Metrics workshop, July 2011 |
| #uxbristol | UXBristol: Bristol Usability Conference, July 2011 |
| #p1event | The Power of One, November 2011 |

Upon revisiting Martin’s post, I find that he is now asking people to help co-ordinate the effort to rescue archives by sharing those they have created using his method. Several of the above already appear, but I have added the remainder to his catalogue.

I will be contacting the organisers of each event to make them aware of the changes and to share the link to the relevant archive, as a matter of courtesy. I will also be making a local copy of each archive to store offline with the other materials from each event.

The Future

I am a Hootsuite Pro customer, so I investigated my options to continue using the Twapper Keeper functionality in its new incarnation. Information about the new feature is not yet easy to find on the Hootsuite website, but I did eventually find this how-to explaining the new process. Interestingly, I also found instructions to download your archive, a feature that Twitter demanded that Twapper Keeper remove back in March of this year, and a pause/resume archiving function.

There is, of course, a catch. A Pro customer (paying $5.99 per month) can archive only a measly 100 tweets, or purchase a bolt-on to archive up to “100,000 tweets and download all keyword related Twitter messages”. When I attempted to upgrade my plan, I found that 10,000 additional tweets would cost me $10 per month, and 100,000 additional tweets would cost me $50 per month.
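To put those prices in context, here is a small sketch of the monthly cost of archiving a given number of tweets. It assumes, without having confirmed it against Hootsuite's terms, that a single bolt-on is added on top of the Pro plan and its 100-tweet allowance; the constant and function names are illustrative only.

```python
# Monthly prices quoted above (USD). Assumption: one bolt-on, added on top of
# the Pro plan and its 100-tweet allowance.
BASE_PRICE = 5.99
BASE_ALLOWANCE = 100
BOLT_ONS = [(10_000, 10.00), (100_000, 50.00)]  # (additional tweets, monthly price)

def monthly_cost(tweets_needed):
    """Cheapest monthly cost to archive `tweets_needed` tweets, or None if no tier fits."""
    if tweets_needed <= BASE_ALLOWANCE:
        return BASE_PRICE
    for extra, price in BOLT_ONS:  # tiers listed from smallest to largest
        if tweets_needed <= BASE_ALLOWANCE + extra:
            return round(BASE_PRICE + price, 2)
    return None

print(monthly_cost(480))
```

For example, a full-day event averaging a tweet a minute over eight hours (around 480 tweets) would already exceed the basic allowance, and under these assumptions `monthly_cost(480)` comes to $15.99 per month.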

Luckily, Martin Hawksey is a master of Google Spreadsheet tools and has created this alternative method of collecting tweets, along with detailed instructions to archive and visualise Twitter conversations around an event hashtag. I will certainly be making greater use of these tools for future events.

As I have mentioned before, you can use CoverItLive to collect tweets and export them as an RSS feed. I tend to have this running as an incidental back up to Twapper Keeper at events, as it is my preferred method of offering interactive access to amplified discussions for participants who choose not to use Twitter. However, I tend to run a CoverItLive event only for the duration of the event itself, so this does not preserve the longer tail of the discussions.
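If you do export the CoverItLive discussion as an RSS feed, a local copy can be kept alongside the other event materials. The sketch below assumes the export is standard RSS 2.0 with `title`, `link` and `pubDate` elements on each `item`; I have not checked the exact fields CoverItLive produces, and the function names and sample feed are hypothetical.

```python
import csv
import io
import xml.etree.ElementTree as ET

def rss_to_rows(rss_xml):
    """Extract (pubDate, title, link) from each <item> of an RSS 2.0 feed."""
    root = ET.fromstring(rss_xml)
    return [
        (item.findtext("pubDate", default=""),
         item.findtext("title", default=""),
         item.findtext("link", default=""))
        for item in root.iter("item")
    ]

def write_archive(rows, stream):
    """Write the rows as CSV with a header line."""
    writer = csv.writer(stream)
    writer.writerow(["pubDate", "title", "link"])
    writer.writerows(rows)

# Illustrative feed (hypothetical content).
SAMPLE_FEED = """<rss version="2.0"><channel><title>Event backchannel</title>
<item><title>First comment #example</title><link>http://example.org/1</link><pubDate>Mon, 02 Jan 2012 10:00:00 GMT</pubDate></item>
<item><title>Second comment #example</title><link>http://example.org/2</link><pubDate>Mon, 02 Jan 2012 10:05:00 GMT</pubDate></item>
</channel></rss>"""

buffer = io.StringIO()
write_archive(rss_to_rows(SAMPLE_FEED), buffer)
print(buffer.getvalue())
```

To save to disk instead, pass a file opened with `open("archive.csv", "w", newline="", encoding="utf-8")` as the stream; the `newline=""` argument stops the `csv` module's line endings from being doubled on Windows.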

The code for Your Twapper Keeper, which enables you to create your own local version of Twapper Keeper for personal use, is still available. However, at the time of writing the accompanying website appears to be unavailable, so it is not clear whether this tool will remain supported.

Update: John O’Brien has just let me know that the latest version of Your Twapper Keeper is available here.

Reflections

Once again, this is a pertinent reminder of the fragility of using third party online services. However, it also raises questions about who should care for amplified event materials stored online after the event, and for how long. On this occasion I had a personal interest in seeing these archives migrated safely to an alternative site, but in general I have no contractual obligation to ensure the longevity of materials from the events I amplify. Should I, as a professional event amplifier, be committing to monitor online services and alert previous clients if their materials are at risk? Should I be migrating the content myself? If I take on these responsibilities, how long should they last? And how should I charge for such a service? Even with Martin Hawksey’s incredibly simple and timely solution to retrieve archives from Twapper Keeper, it has still taken a not-inconsiderable amount of time to assess the implications of the changes, create the duplicate archives, share them, and communicate with the event organisers to notify them. Managing the withdrawal of other services may take even longer.

This experience has reinforced the need to consider these issues and to ensure that there is a clear agreement in place with my clients covering whether they want materials from their amplified event to be maintained over time, what should be saved in the event of a service closure, and who should be responsible for migrating that content. My concern is that many event organisers only see short term value in amplification, and may not be fully conversant with the issues. There may also be concerns from participants in amplified events, who agreed to one thing at the time and may not want their contributions migrated to a different environment, which they may have cause to distrust.

Conclusions

I feel like I have waded into a bit of a quagmire here. Many of these issues will undoubtedly be debated in time and at the moment there are so many questions that I could end up writing an EVEN LONGER post just to explore them. But not today.

However, I would very much welcome the perspectives of any event organisers who have used Twitter at their event. Is the long-term existence of an archive of those discussions something you would value? And is the continued availability of online materials from your event an issue of concern when you amplify it?